What is Concurrent Programming

A computer program is a set of instructions that a computer executes to produce a specific output. These instructions are grouped into computational tasks, each of which performs a distinct piece of work.

In sequential programming, computational tasks are executed one after another. Only one task runs at any given time, so all of the computer's resources, including memory and processing power, are dedicated exclusively to that task.

Conversely, concurrent programming refers to a programming technique where two or more computational tasks are executed in an interleaved manner through context switching. These tasks are completed within an overlapping timeframe, while carefully managing access to shared resources such as computer memory and processing cores.
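To make interleaving concrete, here is a minimal sketch in Python that assumes a cooperative model, in which each task voluntarily hands control back at a `yield`. The names `task` and `run_round_robin` are hypothetical helpers for illustration. An operating system usually switches between threads preemptively instead, but the effect is similar: both tasks make progress within an overlapping timeframe even though only one runs at any instant.

```python
from collections import deque
from typing import Deque, Generator, List

Task = Generator[None, None, None]

def task(name: str, steps: int, log: List[str]) -> Task:
    """A task that does one unit of work per step, then yields control."""
    for step in range(steps):
        log.append(f"{name}{step}")
        yield  # context switch point: hand control back to the scheduler

def run_round_robin(tasks: List[Task]) -> None:
    """Run tasks one step at a time, rotating between them until all finish."""
    ready: Deque[Task] = deque(tasks)
    while ready:
        current = ready.popleft()
        try:
            next(current)  # resume the task until its next yield
        except StopIteration:
            continue  # the task has finished; drop it from the queue
        ready.append(current)  # not finished yet; send it to the back of the line

log: List[str] = []
run_round_robin([task("A", 3, log), task("B", 2, log)])
print(log)  # ['A0', 'B0', 'A1', 'B1', 'A2']
```

Both tasks finish within the same window of time, yet no two steps ever execute at the same moment. That is concurrency without parallelism.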

Even though modern computers have multiple cores, concurrency remains crucial: a single process may need to run hundreds or even thousands of tasks at once, far more than the number of available cores, so those tasks must share the cores by taking turns.

Concurrency != Parallelism

In computing, parallelism also enables tasks to be completed within overlapping time intervals. However, parallelism and concurrency are distinct concepts: while both aim to improve task execution, they take different approaches.

Parallelism, unlike concurrency, doesn't rely on sharing computational resources through context switching. Instead, parallel computing divides a complex task into smaller, independent sub-tasks and processes them simultaneously across multiple computing environments. Because the sub-tasks run at the same moment rather than taking turns, the workload is genuinely distributed across the available resources.

The goal of parallel computing is faster and more efficient execution by harnessing multiple computing resources. Each independent computing environment, such as a CPU core or a separate machine, handles its designated sub-task at the same time as the others, which shortens the overall execution of the original task.
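The divide-and-combine pattern described above can be sketched with Python's `concurrent.futures.ProcessPoolExecutor`. The workload (summing squares) and the helper names `split_into_chunks`, `sum_of_squares`, and `parallel_sum_of_squares` are hypothetical. The sketch uses separate processes rather than threads because, in the standard CPython build, the global interpreter lock prevents threads from executing Python code in parallel.

```python
from concurrent.futures import ProcessPoolExecutor
from typing import List

def split_into_chunks(n: int, parts: int) -> List[range]:
    """Divide range(n) into at most `parts` independent, non-overlapping sub-ranges."""
    size = max(1, (n + parts - 1) // parts)  # ceiling division so no number is dropped
    return [range(start, min(start + size, n)) for start in range(0, n, size)]

def sum_of_squares(chunk: range) -> int:
    """One independent sub-task: it needs no data from any other chunk."""
    return sum(x * x for x in chunk)

def parallel_sum_of_squares(n: int, workers: int = 4) -> int:
    """Process each chunk in its own worker process, then combine the partial sums."""
    chunks = split_into_chunks(n, workers)
    with ProcessPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(sum_of_squares, chunks))
```

On platforms that start worker processes with the `spawn` method (the default on Windows and macOS), the main module is re-imported in each worker, so `parallel_sum_of_squares(1_000_000)` should be called from inside an `if __name__ == "__main__":` block. Since every chunk is independent, the partial sums can be computed in any order and simply added together at the end.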

Realms in the Arena

Shared Memory Model

Shared memory concurrency is a traditional approach to achieving concurrency in software systems. In this model, multiple threads or processes communicate and synchronize their operations through shared memory regions. Threads or processes can access and modify shared data, allowing for collaboration and coordination. However, this approach requires explicit synchronization mechanisms, such as locks or semaphores, to prevent data races and ensure data integrity.
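As a minimal sketch of the shared memory model, the Python example below has four threads increment one shared counter. The `Counter` class and the `run_workers` helper are hypothetical; the synchronization uses the standard `threading.Lock`. Without the lock, the read-modify-write in `self.value += 1` could interleave between threads and lose updates.

```python
import threading

class Counter:
    """Shared state: every worker thread reads and writes the same `value`."""

    def __init__(self) -> None:
        self.value = 0
        self._lock = threading.Lock()

    def increment(self) -> None:
        # `self.value += 1` is a read-modify-write sequence. Holding the lock makes it
        # mutually exclusive across threads, so no update is lost to a data race.
        with self._lock:
            self.value += 1

def run_workers(counter: Counter, threads: int, increments: int) -> None:
    """Start `threads` threads that each call `counter.increment()` `increments` times."""
    def work() -> None:
        for _ in range(increments):
            counter.increment()

    workers = [threading.Thread(target=work) for _ in range(threads)]
    for worker in workers:
        worker.start()
    for worker in workers:
        worker.join()  # wait for every thread before reading the result

counter = Counter()
run_workers(counter, threads=4, increments=10_000)
print(counter.value)  # 40000
```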

Shared memory concurrency often involves complex coordination and can be prone to issues like deadlocks and race conditions. Nonetheless, it offers fine-grained control over resource sharing and is suitable for performance-critical tasks that require low-level manipulation.
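Deadlocks typically arise when two threads acquire the same locks in opposite orders. One common remedy is to always acquire locks in a single global order. The sketch below assumes a hypothetical `Account` class and `transfer` function, and orders locks by account id so that transfers in both directions can run at the same time without deadlocking.

```python
import threading
from typing import List

class Account:
    def __init__(self, account_id: int, balance: int) -> None:
        self.account_id = account_id
        self.balance = balance
        self.lock = threading.Lock()

def transfer(src: Account, dst: Account, amount: int) -> None:
    if src is dst:
        return  # a threading.Lock is not reentrant, so never lock the same account twice
    # Acquire both locks in one global order (lowest account_id first). If one thread
    # locked A then B while another locked B then A, each could hold one lock and wait
    # forever for the other: a deadlock.
    first, second = sorted((src, dst), key=lambda acct: acct.account_id)
    with first.lock, second.lock:
        src.balance -= amount
        dst.balance += amount

a = Account(1, 1000)
b = Account(2, 1000)
threads: List[threading.Thread] = []
for _ in range(100):
    threads.append(threading.Thread(target=transfer, args=(a, b, 5)))  # A -> B
    threads.append(threading.Thread(target=transfer, args=(b, a, 3)))  # B -> A
for t in threads:
    t.start()
for t in threads:
    t.join()
print(a.balance, b.balance)  # 800 1200
```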

Threads are a concrete implementation of the shared memory model.

Message Passing Model

Message-passing concurrency, by contrast, is a paradigm that focuses on communication and coordination between independent processes or actors. Instead of sharing memory, processes communicate by sending messages to each other. Each process has its own isolated memory space, and communication occurs only through explicit message exchanges. Because processes never directly access each other's memory, message passing offers a more decoupled approach that also extends naturally to distributed systems.

This model can offer better encapsulation and fault isolation, as processes are less likely to interfere with each other's state. However, message passing introduces overhead in terms of message serialization, deserialization, and communication between processes, which can impact performance.
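The following sketch simulates, within a single Python process, what happens to a message on its way between two isolated processes: it is serialized to bytes with the standard `pickle` module and rebuilt on the other side. (Python's `multiprocessing.Queue` likewise pickles the objects it sends.) The `order` message is a made-up example.

```python
import pickle

# The sender's private state: a hypothetical order message.
order = {"id": 42, "items": ["apple", "pear"]}

# Sending: the message is serialized into bytes. This encoding step, plus the
# matching decoding step below, is the overhead that message passing adds.
payload = pickle.dumps(order)

# Receiving: the other side rebuilds its own, independent copy from the bytes.
received = pickle.loads(payload)
received["items"].append("plum")  # the receiver mutates only its copy

print(order["items"])     # ['apple', 'pear']
print(received["items"])  # ['apple', 'pear', 'plum']
print(f"message size: {len(payload)} bytes")
```

The receiver's change never reaches the sender, which is the isolation the model promises; the price is that every message must be encoded and decoded.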

Actors are a concrete implementation of the message-passing model.
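Python has no built-in actor library, so the following is a hand-rolled sketch, assuming a hypothetical `CounterActor` class built from a `threading.Thread` and a `queue.Queue` mailbox. The actor's count is private; other code can only send it messages, and it answers with messages of its own. Full actor frameworks add much more, such as supervision and distribution across machines.

```python
import queue
import threading
from typing import Optional

class CounterActor:
    """A minimal actor: private state, a mailbox, and one thread that handles messages."""

    def __init__(self) -> None:
        self._count = 0  # private state: only the actor's own thread touches it
        self._mailbox: queue.Queue = queue.Queue()
        self._thread = threading.Thread(target=self._run)
        self._thread.start()

    def send(self, message: str, reply_to: Optional[queue.Queue] = None) -> None:
        """The only way to interact with the actor: drop a message in its mailbox."""
        self._mailbox.put((message, reply_to))

    def stop(self) -> None:
        """Ask the actor to shut down and wait for its thread to finish."""
        self.send("stop")
        self._thread.join()

    def _run(self) -> None:
        while True:
            message, reply_to = self._mailbox.get()  # one message at a time, in FIFO order
            if message == "increment":
                self._count += 1
            elif message == "get" and reply_to is not None:
                reply_to.put(self._count)  # answer with another message, not a shared variable
            elif message == "stop":
                break

actor = CounterActor()
for _ in range(5):
    actor.send("increment")

replies: queue.Queue = queue.Queue()
actor.send("get", reply_to=replies)
count = replies.get(timeout=5)
print(count)  # 5
actor.stop()
```

Because the mailbox is processed strictly in order, the five increments are always handled before the `get`, and no lock is needed around `_count`.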

Event Looping: Novel and Lightweight

Event looping is a lighter-weight approach to concurrency that has become popular in modern event-driven programming. It is built around an event loop, which efficiently manages and dispatches events or tasks in an asynchronous environment. Rather than relying on multiple threads that share memory, an event loop typically runs on a single thread and uses non-blocking I/O operations and callbacks to handle events as they occur: while one task waits for I/O, the loop runs another. This model is well suited to applications that rely heavily on user interactions, system notifications, or external data sources.

By using event loops, developers can design highly responsive and scalable systems. Because only one callback runs at a time, tasks can make progress concurrently with little or no explicit synchronization. However, event looping may not be suitable for scenarios that require extensive resource sharing or fine-grained control over synchronization, and a single long-running, CPU-bound callback blocks every other task on the loop.
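The sketch below uses Python's standard `asyncio` event loop. The `fetch` coroutine is hypothetical, and `asyncio.sleep` stands in for a real non-blocking I/O call such as a network request. All three tasks run on a single thread, yet the program takes roughly as long as the slowest task (about 0.3 seconds) rather than the sum of all three.

```python
import asyncio
from typing import List, Tuple

async def fetch(name: str, delay: float, log: List[str]) -> str:
    # asyncio.sleep stands in for non-blocking I/O: while this task waits,
    # the event loop is free to run the other tasks on the same thread.
    await asyncio.sleep(delay)
    log.append(name)  # record the order in which tasks finish
    return f"{name} done"

async def main() -> Tuple[List[str], List[str]]:
    log: List[str] = []
    # Schedule all three coroutines on the loop and wait until every one completes.
    results = await asyncio.gather(
        fetch("slow", 0.3, log),
        fetch("fast", 0.1, log),
        fetch("medium", 0.2, log),
    )
    return log, list(results)

log, results = asyncio.run(main())
print(log)      # ['fast', 'medium', 'slow']
print(results)  # ['slow done', 'fast done', 'medium done']
```

`asyncio.gather` returns results in the order the coroutines were passed, even though they finished in a different order. No lock is needed around `log`, because the loop only switches tasks at an `await`, so the append cannot be interrupted.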

Your Roadmap to Concurrency

Now, you probably have a concurrency problem to solve in your application, and I know what you're thinking. Choosing how to solve it isn't straightforward, because each of these approaches addresses different use cases and each has its own bottlenecks. But that may not be the biggest nightmare you have to deal with.

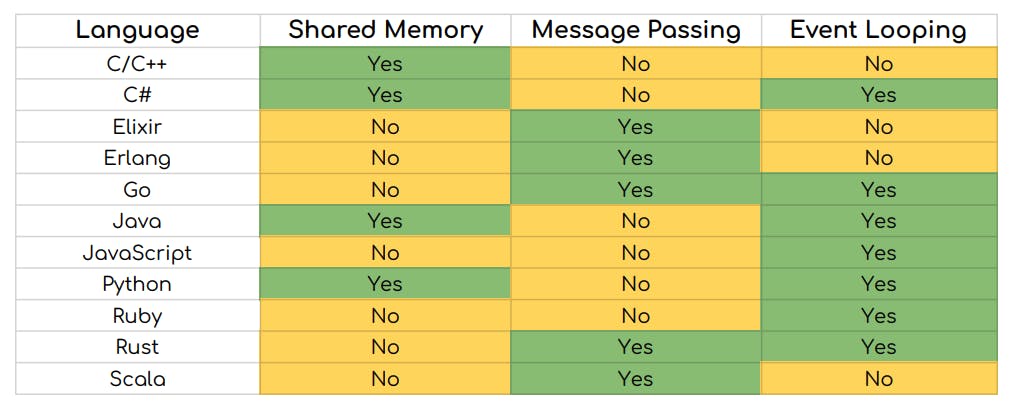

Not every programming language supports all three types of concurrency. So if you're in the middle of developing a monolithic application and are tied to a single programming language, your choice of concurrency approach may be limited to whatever that language offers.

Even though some programming languages do not yet support every approach to concurrency, languages are increasingly embracing the need for more than one type of concurrency implementation.

Conclusion

Shared memory concurrency and message-passing concurrency both aim to achieve concurrency, but they differ in how tasks communicate and synchronize. Shared memory concurrency offers fine-grained control over resource sharing but requires explicit synchronization mechanisms. Message-passing concurrency, in contrast, promotes decoupling and fault isolation but introduces communication overhead. Event looping focuses on event-driven execution and asynchronous tasks, offering scalability and responsiveness with little need for shared-memory threads or explicit synchronization.

Choosing between these models depends on the specific requirements of the application. Shared memory concurrency is suitable for scenarios that demand fine-grained control over shared resources. Message-passing concurrency is useful when isolation and fault tolerance are critical. Event looping excels in event-driven scenarios where responsiveness and scalability are paramount. Developers must consider factors such as performance, complexity, ease of development, and the nature of the problem at hand when deciding on the appropriate concurrency model.